FILM AND VFX TESTS POWERED BY AI.

*For AI & VFX consultancy services, please fill out the form on the contact page.

[MAR 2026]

VACACIONES DE TERROR 3.0

A tribute to René Cardona III, Pedro Fernández, Gabriela Hassel, and Mexican cinema’s own Evil Dead.

Tools used: Seedance 2.0 (courtesy of YouArt), Google Nano Banana 2.0 and Kling Motion Control (courtesy of Runway Workflows CPP), Kling 3.0 (courtesy of Leonardo CPP), Nuke, Suno, Photoshop, and DaVinci Resolve.

[FEB 2026]

SEEDANCE 2.0 TEST

ByteDance’s new multimodal audio-video model is a significant leap, moving beyond the uncanny valley we’ve seen in other tools.

[OCT 2025]

VFX/AI BREAKDOWN of my work by VP LAND

Breakdown by Addy Ghani and Joey Daoud from VP Land (Denoised Podcast), discussing the AI/VFX exploration I did using Wan 2.2, Beeble, Nano Banana and Foundry Nuke. Btw, to overcome the resolution limitations of the clips, I used Topaz Labs Astra for upscaling.

[OCT 2025]

WAN 2.2 ANIMATE TESTS

Experimenting with WAN 2.2 ANIMATE in combination with NANO BANANA, BEEBLE, TOPAZ ASTRA and NUKE for VFX enhancements, replacements and full-head de-aging.

EXPERIMENTING WITH SORA 2 PRO

"Los Consejos del Santo" - Experimenting with animation created with Sora 2 Pro, Seedance Pro, Nano Banana, DaVinci Resolve and Topaz Astra.

[JULY 2025]

SUPERMAN & LOIS: AI RELIGHTING WITH BEEBLE SWITCHLIGHT

Featured at SIGGRAPH 2025 in Vancouver, Canada

FULL ARTICLE AND INTERVIEW WITH ANDRES REYES BOTELLO & FREDDY CHAVEZ OLMOS:

[JUN 2025]

LUMA'S DREAM MACHINE Before/After Breakdown [Modify Video]

Across film, advertising, and visual media, working with babies has always been a challenge. Now, new technology offers an alternative.…

Created with the new Modify Video tool (Vid2Vid, early access) in Dream Machine by LUMA AI.

[MAR 2025]

SUPERMAN & LOIS MACHINE LEARNING CASE STUDY

A case study on our implementation of Wonder Dynamics’ AI-powered markerless motion capture technology for television in Superman & Lois. This process accelerated previsualization, gave the stunt department the flexibility to perform without being constrained by technical gear, and enhanced Boxel Studio’s animation pipeline. VFX artists could record their own motion capture performances as a starting point, which were later polished in Cascadeur and Autodesk Maya based on client feedback.

Learn more about our collaboration:

https://www.awn.com/vfxworld/wonder-dynamics-helps-boxel-studio-embrace-machine-learning-and-ai

[FEB 2025]

PIKASWAPS by Pika Labs

Testing Pika’s new Modify Region tool, “Pikaswaps”, which lets you specify what you want to change in video footage and what to replace it with, using prompts, a paint brush and image references. This tool clearly shows how rapidly this tech is advancing. I’m grateful to be collaborating with Pika’s research scientist team as an early tester, helping to refine the tool and explore new use cases. Having worked in the VFX industry for 20 years, I can say it’s not Hollywood QC-ready yet, but it still blows my mind that this is even possible and that it will keep evolving. There are sure to be plenty of exciting video-to-video releases this year.

Stock footage by ActionVFX.

[FEB 2025]

PROJECT STARLIGHT by Topaz Labs

Testing Topaz Labs' Project Starlight, a new AI diffusion-based video enhancement model. It's quite impressive and fast too!

[FEB 2025]

PIKADDITIONS by PIKA LABS

Testing multiple takes using Pika's new feature, Pikadditions, which allows you to add AI elements to existing live-action footage. I was surprised that it not only extended my shoulder and arm to accommodate the capybara sitting on it but also added interactive shadows on me. I'm looking forward to future updates of this tool, especially once alpha export and other controls become available.

[JAN 2025]

FADING LIGHTS - A surreal experimental piece exploring the fragility of mortality, created using Pika 2.1, Runway Frames, Magnific and Suno.

[JAN 2025]

PIKA 2.1

Testing Pika's upcoming img2vid model 2.1 (1080p)

A significant improvement in facial movement for non-human characters.

[JAN 2025]

LUMA RAY 2 & MMAUDIO

Test using Luma AI's newly released video model, Ray 2 (text-to-video, 720p). There is a significant improvement in physics understanding compared to the previous model. Sound was created with MMAudio, which is also a remarkable new tool for generating audio from video.

[JAN 2025]

CELEBRITY DEADMATCH - Edición Especial México

Stop-motion style test using GenAI tools to generate in-betweens and simulate claymation movement.

[DEC 2024]

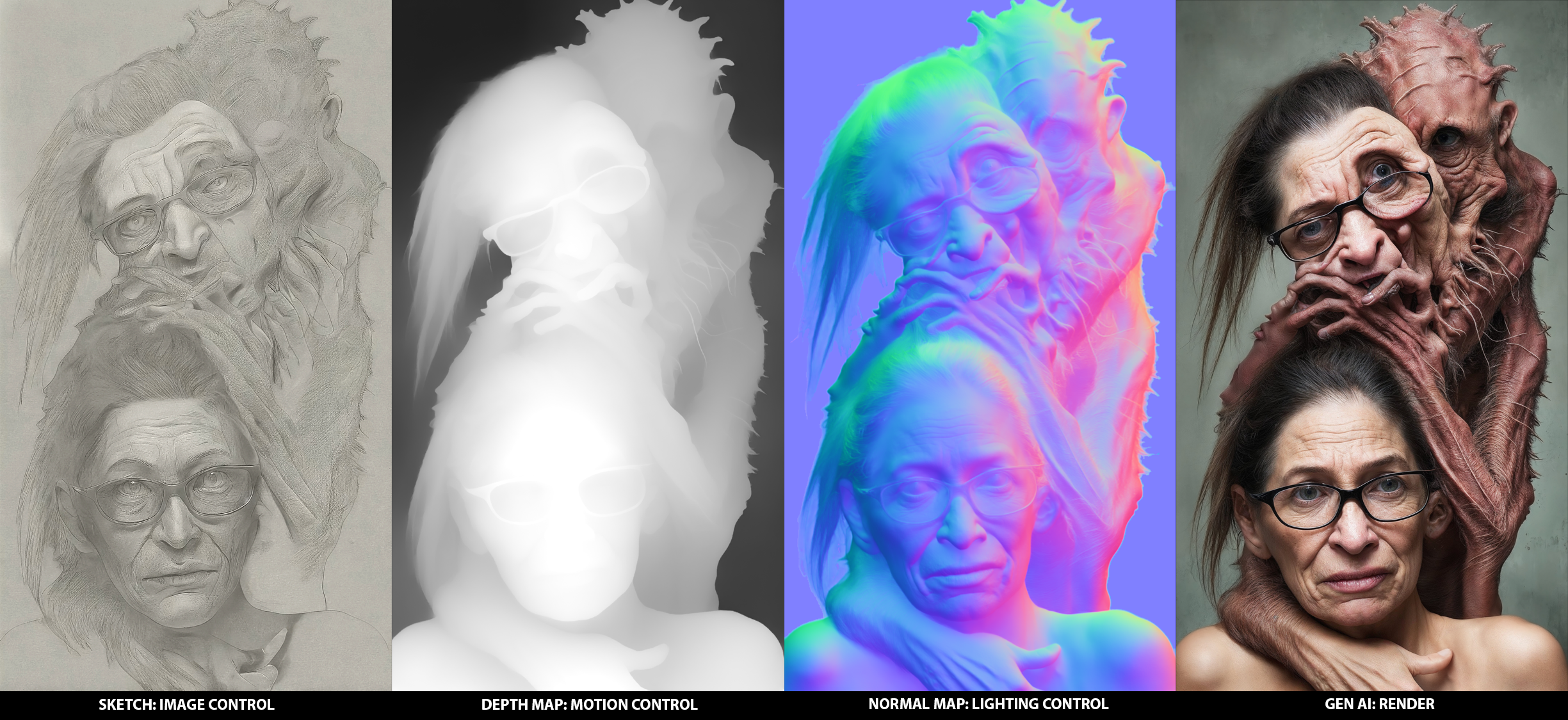

PORTRAITS WITH OUR INNER-DEMONS

"Inspired by the curated self-images we present on social media, where positivity often masks our true selves to avoid judgment, this work reveals the hidden struggles we tend to conceal. Through captivating portraits that depict individuals confronting their inner demons, Freddy merges art with emerging technologies. Exhibited in galleries and live events in the United States (Houston, Texas) and major Mexican cities such as Guadalajara and Puebla, these pieces invite us to make peace with our inner struggles, embracing them as essential aspects of our humanity."

Tools used: Photoshop, Leonardo, Magnific, Beeble Switchlight, Foundry Nuke, DaVinci Resolve & Suno.

[NOV 2024]

VANCOUVER CANUCKS - SEASON OPENING VIDEO

I had the opportunity to co-write and help develop visual concepts during pre-production using Leonardo AI; these concepts served as creative guides for the production of the Vancouver Canucks’ season-opening video. The final piece was showcased on the jumbotron at Rogers Arena in front of more than 18,000 fans throughout the hockey season.

[Dec 2024]

Going back in time to 1983 with Pika 2.0

[Sep 2024]

In this exclusive behind-the-scenes featurette, Tom addresses the swirling online debate over AI usage in his latest horror film, "Mutant Horizon."

DISCLAIMER: This content has been generated using AI technology for parody purposes only and is entirely fictional. It serves to demonstrate the current capabilities of generative AI technology.

Tools used: Hailuo Minimax

[Aug 2024]

Ten years ago, I was part of the VFX crew on Neill Blomkamp's film "CHAPPIE". It's amazing to reunite with Chappie once again using an iPhone and Simulon (Beta), with complete creative control of a CG character on a mobile device. Chappie's voice was created with ElevenLabs.

[Jun 2024]

Testing the new KREA Video currently in Beta.

[Jun 2024]

TESTING TESLA FULL SELF-DRIVING (FSD) IN VANCOUVER, CANADA.

[May 2024]

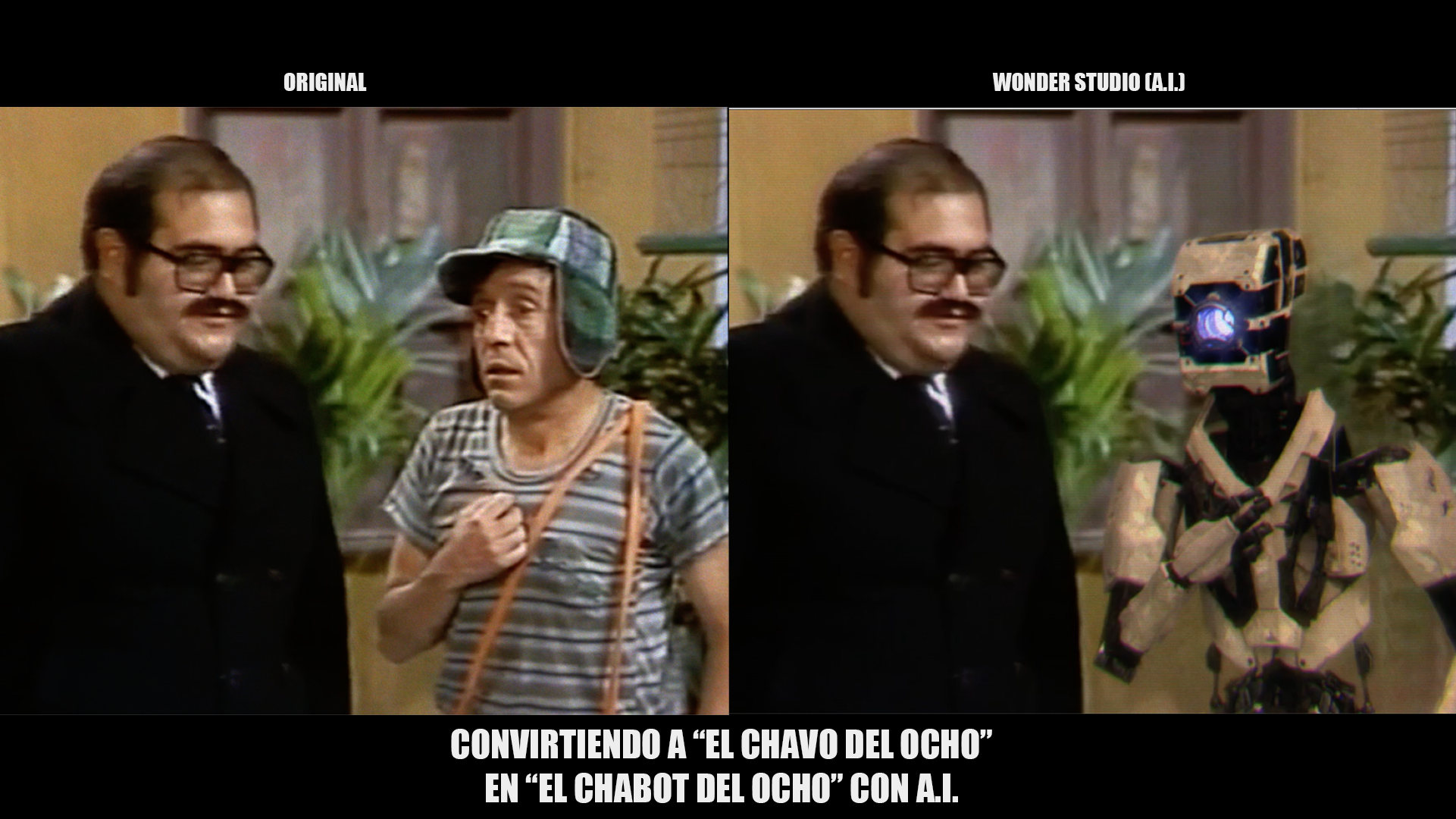

[ES] Combinando y testeando algunas técnicas de VFX y AI para recrear al personaje de Don Ramon y El Chavo del Ocho en los años 70s.

[EN] Testing some VFX and AI techniques and workflows to recreate the characters Don Ramón and El Chavo from the 1970s Mexican TV show El Chavo del Ocho.

[Apr 2024]

I had the chance to test an early version of Beeble Switchlight's plugin for Foundry Nuke. Pretty incredible! It's one of many innovative AI-powered tools coming soon in the Switchlight Studio release. Oh, and now you can also extract ambient occlusion in addition to normals, albedo, specular, roughness, depth, and LDR-to-HDR light maps.

Plate Footage by ActionVFX

Music by Mubert

[Mar 2024]

A new tool worth sharing. In this test, DUSt3R estimated camera positions and generated a point cloud from single frames of multiple shots in a scene, with minimal or no overlap between them, in just a few seconds on an RTX 4090. The tool currently only exports a GLB file, but it looks quite promising, especially once it is able to generate moving cameras.

[Feb 2024]

We have partnered with Beeble Switchlight to find solutions for inconsistent lighting on greenscreen driving plates. It's been almost a year since Switchlight first caught my attention. It was initially developed as an app for relighting single-frame portraits, and I had the pleasure of meeting Hoon in person at last year’s SIGGRAPH in LA. We quickly became good friends and realized the tool's versatility in addressing common issues in VFX. Although it is still in beta and maturing toward a professional level, Switchlight has evolved into a more robust tool, capable of extracting Normals, Albedo, Specular, Roughness, and Depth maps from a plate. It can also generate an HDR from an LDR background plate, as well as a Neural Render for use in compositing alongside the other maps. I hope that tools like these can help overcome the negative stigma around AI/ML and showcase their potential for real-world applications.

[Jan 2024]

"Bye-Bye" was shot in only 2 hours on a virtual production stage. These VFX breakdowns feature GenAI and machine learning tools, such as Nuke’s CopyCat, Leonardo and Photoshop’s Generative Fill, used to assist and speed up post-production.

[Dec 2023]

Real-time GenAI is progressing rapidly, now running at 24 fps and 30 fps from a webcam. Although this tech still needs greater consistency and resolution, it's very exciting to test ideas and see instant results thanks to what @burkaygur and @fal_ai_data are building.

[Nov 2023]

Taking stills from the uncanny valley for a makeover with Magnific.ai

Music by Mubert

[Oct 2023]

[EN] Excited about the future of this tech once it runs at 24 fps, especially when combined with practical effects. AI real-time rendering will open new doors for filmmakers. In this example, I used an animatronic that was built for one of my previous horror short films. Next time I will need to record myself wearing greenscreen spandex ;)

Warning: some of these images might cause nightmares.

[ES] Emocionado por el futuro de esta tecnología una vez que corra a 24 fps, especialmente cuando se combina con efectos prácticos. Real-time rendering con AI abrirá nuevas puertas para los cineastas. En este ejemplo, utilicé un animatrónico que se hizo para uno de mis cortometrajes de terror anteriores. La próxima vez necesitaré grabarme usando spandex verde ;)

Advertencia, algunas de estas imágenes podrían causar pesadillas.

[Sep 2023]

[EN] Testing HeyGen's new AI lip-sync tool for language translation (currently in beta). It not only generated Spanish, French, Hindi, Italian and Portuguese translations but also kept a tone similar to the original. When I ran the tool several times on the same footage, it also produced a few variations of each translation. Integration of this tech into real-time video conferencing may be on the horizon.

*Footage Property of WingNut Films/TriStar Pictures. For Educational Purposes Only.

[ES] Test de la nueva herramienta de AI para lip-sync de traducción de HeyGen (actualmente en Beta). No solo generó traducciones en Español, Francés, Hindi, Italiano y Portugués, sino que también mantuvo un tono similar al original. Además, al usar la herramienta varias veces utilizando la misma grabación, generó variaciones de cada traducción. La integración de esta tecnología en videoconferencias en tiempo real podría no estar muy lejos.

[Aug 2023]

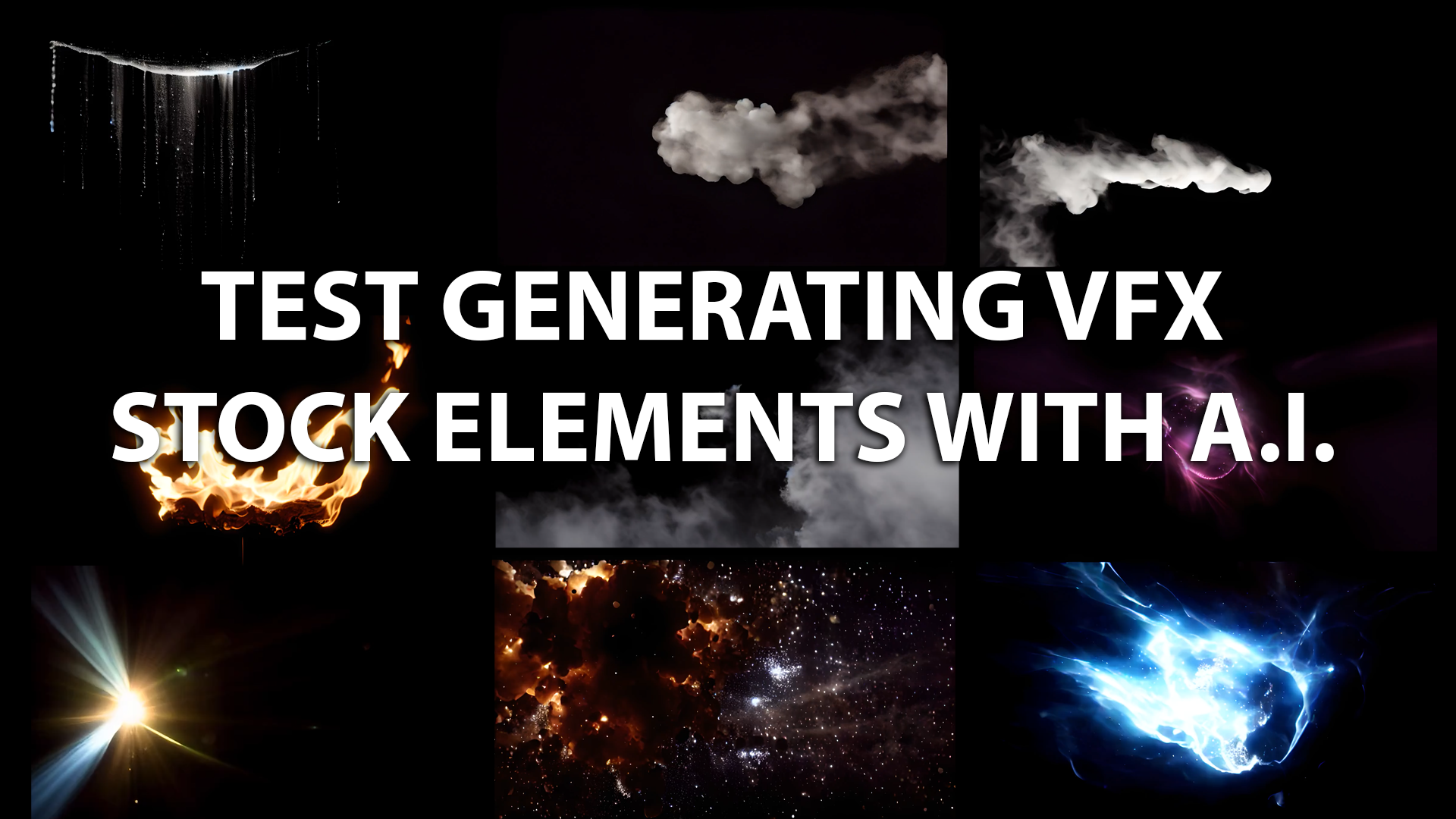

[EN] Test generating stock elements for VFX with Runway's Gen-2, using its interpolation and upres features to improve quality. This is of course still in its infancy, but it shows where this generative tech is heading and its potential applications in VFX. Improvements in resolution, dynamic range and frame rate (among other things) will probably arrive in the next versions of Runway Gen.

Music created with Mubert.

[ES] TEST GENERANDO ELEMENTOS STOCK DE VFX CON I.A.

Test generando elementos de stock para vfx con Gen-2 de Runway usando las funciones de interpolación y upres para mejorar la calidad. Por supuesto, esto todavía está en su etapa inicial, pero muestra el potencial hacia dónde va esta tecnología generativa con aplicaciones a vfx. Mejoras en resolución, rango dinámico y frame rate (entre otras cosas), es algo que probablemente veremos en las próximas versiones de Runway Gen.

Música creada con Mubert.

[Jul 2023]

Short test using a customized model in Leonardo AI, ControlNet and Foundry Nuke’s CopyCat.

[Jun 2023]

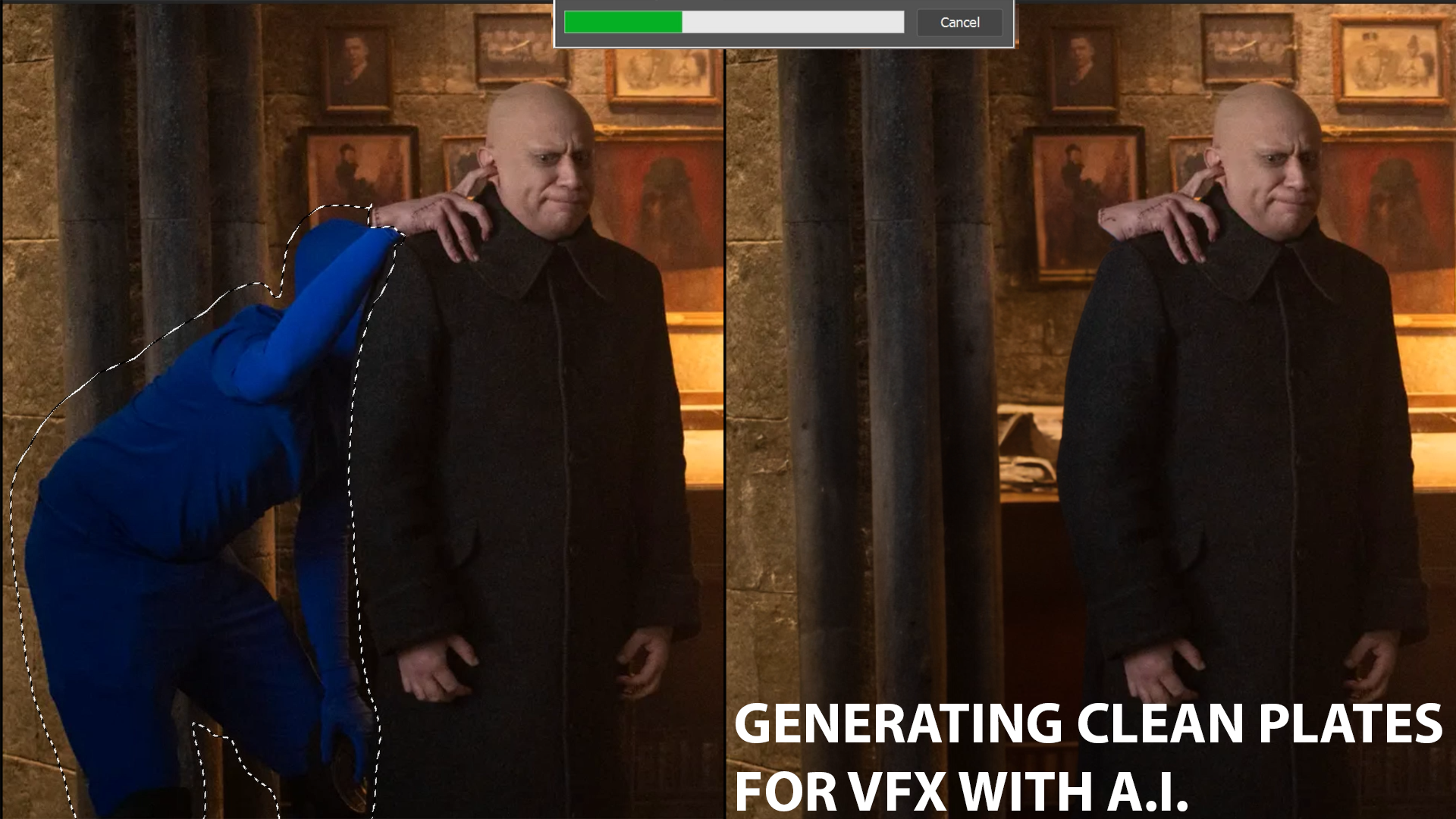

[EN] TEST GENERATING CLEAN PLATE FRAMES FOR VFX WITH A.I.

The new Generative Fill tool in Adobe Photoshop is simply amazing. Soon, having no access to clean plates in some situations won’t be the end of the world, and paint tasks will be less time-consuming.

Although the tool is far from technically perfect, that could change rapidly once the feature moves out of beta. A real clean plate will always be ideal, but when there is none, this tool can make a big difference and assist artists in a meaningful way.

Music created with Mubert.

*Still Images property of VLAD CIOPLEA/NETFLIX. For Educational Purposes Only*

[ES] TEST GENERANDO FRAMES DE CLEAN PLATES PARA VFX CON I.A.

La nueva herramienta de Photoshop está increíble. Pronto, no tener acceso a clean plates en algunas situaciones no será el fin del mundo y los tasks de paint consumirán menos tiempo.

Música creada con Mubert.

*Imágenes propiedad de VLAD CIOPLEA/NETFLIX. Solo con fines educativos*

[May 2023]

[EN] CREATING DIGITAL MAKEUP FX CONCEPTS WITH A.I.

Test using Leonardo AI, Foundry Nuke’s CopyCat and EbSynth. Leonardo’s support for customized models and ControlNet features lets you do some pretty fun stuff for horror projects.

Music created with Mubert.

*Footage property of Paramount Pictures. For Educational Purposes Only*

[ES] CREANDO EFECTOS DE MAQUILLAJE DIGITAL CONCEPTUAL CON A.I.

Test utilizando Leonardo AI, CopyCat de Nuke y EbSynth. La capacidad de usar Leonardo para utilizar modelos personalizados y funciones de controlNet te permite hacer cosas bastante divertidas para proyectos de terror.

Música creada con Mubert.

*Material propiedad de Paramount Pictures. Solo con fines educativos*

[Apr 2023]

TURNING “EL CHAVO DEL OCHO” INTO “EL CHABOT DEL OCHO” WITH A.I.

[ES] Mi primer test usando Wonder Studio (Beta) reemplazando al Chavo del Ocho con un Bot. Por el momento, Wonder Studio es limitado en los tools que genera para obtener control del CG y el Clean Plate dentro de su plataforma. Incluso el Alpha que te genera no es del CG sino del Clean Plate, por lo que es difícil hacer cualquier modificación en el personaje 3D a menos que hagas las modificaciones en el archivo de Blender. Estoy seguro de que en futuras versiones esto se irá implementando. Por ahora, mi workaround fue hacer un difference key en Nuke y de esa manera obtuve el alpha del personaje para añadirle compresión y artefactos para que se integrara mejor en el plate. Wonder Studio acaba de implementar una herramienta para añadir tu propio personaje 3D, por lo que vendrán más updates y tests de esta app. A pesar de esto, es increíble ver lo que Wonder Dynamics ha logrado usando A.I. en tan poco tiempo. Recomiendo esta entrevista de Allan McKay con Nikola Todorovic (Cofundador y CEO de Wonder Dynamics). Es muy inspirador escuchar su visión:

https://www.youtube.com/watch?v=qRZXWtACIdc&t=1465s

[EN] My first test using Wonder Studio (Beta), replacing El Chavo del Ocho with a Bot. At the moment, Wonder Studio's tools offer limited control over the CG and the clean plate within its platform. The alpha it generates is for the clean plate rather than the 3D character, so it is difficult to make any further tweaks to the CG in the renders unless you modify the Blender file. I have no doubt this will be implemented in future versions. For now, my workaround was to do a difference key in Nuke to obtain the character's alpha, so I could add compression and artifacts that help it integrate into the plate. Wonder Studio has already implemented a feature to add your own character, so more updates and tests for this app are in the works. Despite all of this, I'm amazed by what the team at Wonder Dynamics has achieved with A.I. in such a short time. If you haven't already, I recommend listening to Allan McKay's interview with Nikola Todorovic (Co-Founder and CEO of Wonder Dynamics). It's very inspiring to hear his vision:

https://www.youtube.com/watch?v=qRZXWtACIdc&t=1465s
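The difference-key workaround mentioned above boils down to comparing the composited render against the clean plate it was rendered over: pixels that match become transparent, pixels that differ (the CG character) become opaque. Below is a minimal pure-Python sketch of that idea, not Nuke's Difference node and not the exact setup used in this test. It assumes both images are the same size and perfectly aligned; the function name `difference_key` and the `low`/`high` thresholds are hypothetical.

```python
def difference_key(comp, clean_plate, low=0.02, high=0.1):
    """Build a per-pixel alpha from the difference between two images.

    comp, clean_plate: same-sized images as lists of rows of (r, g, b)
    floats in 0..1. `low` and `high` are hypothetical thresholds: a
    difference at or below `low` gives alpha 0, at or above `high` gives
    alpha 1, with a linear ramp in between.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    if len(comp) != len(clean_plate):
        raise ValueError("images must have the same height")
    alpha = []
    for row_c, row_p in zip(comp, clean_plate):
        if len(row_c) != len(row_p):
            raise ValueError("images must have the same width")
        out_row = []
        for pc, pp in zip(row_c, row_p):
            # Largest absolute per-channel difference for this pixel.
            diff = max(abs(c - p) for c, p in zip(pc, pp))
            # Remap the difference into 0..1 and clamp it.
            ramp = (diff - low) / (high - low)
            out_row.append(min(1.0, max(0.0, ramp)))
        alpha.append(out_row)
    return alpha


# Usage: one row, three pixels (unchanged, faint change, CG character).
plate = [[(0.2, 0.3, 0.4), (0.5, 0.5, 0.5), (0.2, 0.3, 0.4)]]
comp = [[(0.2, 0.3, 0.4), (0.56, 0.5, 0.5), (0.9, 0.1, 0.1)]]
print(difference_key(comp, plate))
```

The soft ramp between `low` and `high` is a design choice to avoid hard, aliased matte edges; in practice, any noise or grain that differs between the two inputs will leak into the matte, so the thresholds need tuning per shot.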

[Mar 2023]

[EN] DEAGING MICHAEL KEATON AS "BATMAN" (1989) IN "THE FLASH" TRAILER (2023) USING A.I.

New test using DeepFaceLab, Photoshop Neural and Blackmagic Design DaVinci Resolve’s Magic Mask.

Music created with Mubert: music powered by A.I.

*Trailer Footage Property of Warner Bros / DC. For Educational Purposes Only*.

[ES] REJUVENECIENDO A MICHAEL KEATON COMO "BATMAN" (1989) EN EL TRÁILER DE "THE FLASH" (2023) USANDO A.I.

Nuevo test usando DeepFaceLab, Photoshop Neural y Magic Mask de Blackmagic Design DaVinci Resolve.

Música creada con Mubert.

*Footage del Tráiler es Propiedad de Warner Bros / DC. Solo para fines educativos.*

[Mar 2023]

The new Switchlight app by Beeble allows you to key, add a background to and relight a single frame. The beta tester version currently has very limited features, but it has lots of potential for VFX compositing. In this test, I used a JPG image from fxguide featuring The Mandalorian over a green screen. I ended up denoising and upresing it to 6K with Topaz Gigapixel, then added the grain back in Photoshop. Once the developers add features such as HDR inputs/outputs, different aspect ratios, per-light/color/edge controls and custom backgrounds, this tool will be one to watch. Nuke plugin, anyone?

Music created with MUBERT: music powered by AI.

*Green screen footage Property of Disney. For Educational Purposes Only.

[Mar 2023]

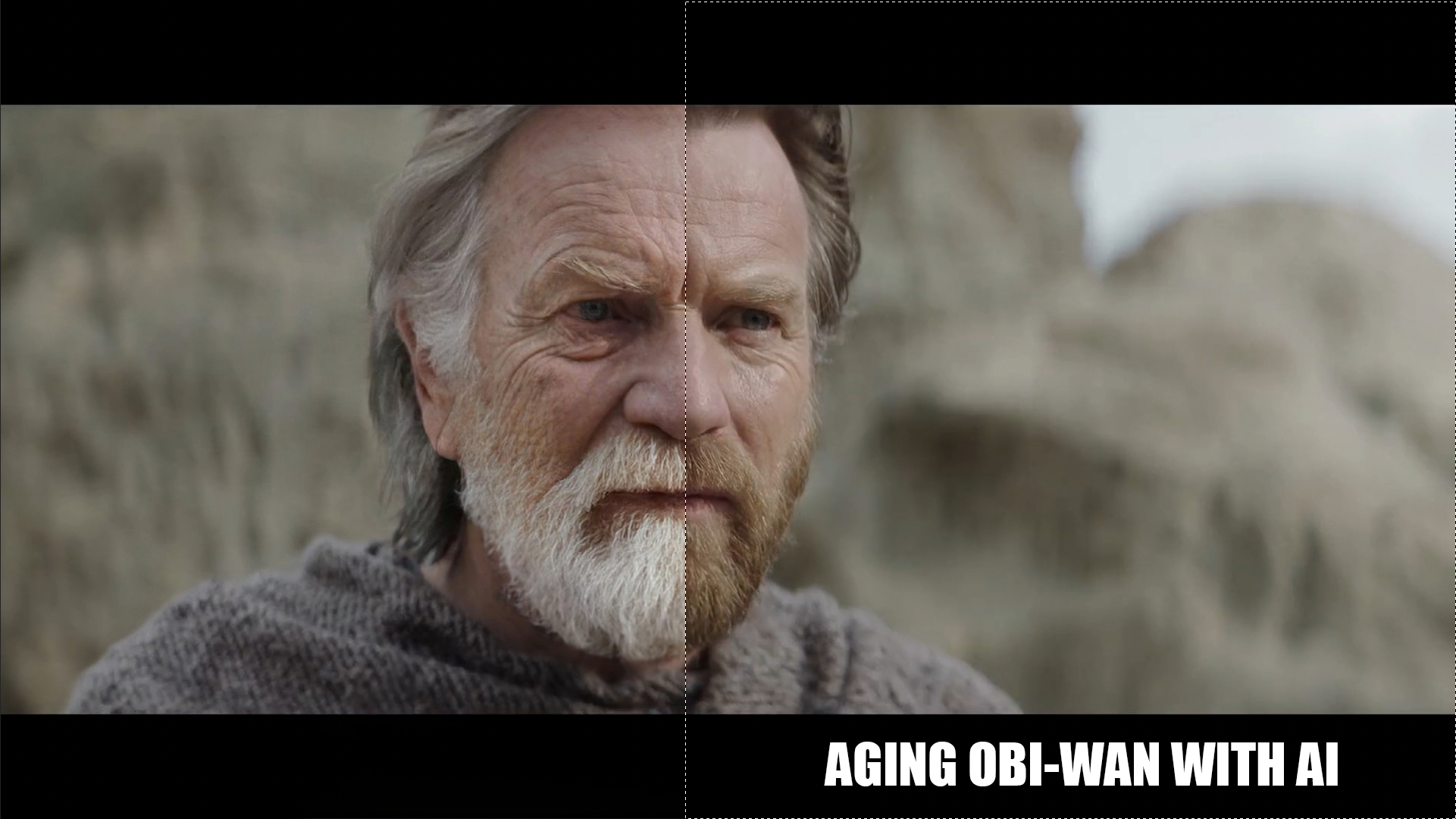

Personal test using AI to age Ewan McGregor into an older Obi-Wan Kenobi, something that could look cool in a flash-forward scene. There was a lot of trial and error on this attempt while learning tools like EbSynth, Stable Diffusion, Photoshop Neural, etc.

Music created with AI using AIVA.

*Footage Property of Disney. For Educational Purposes Only.

[Mar 2023]

Testing some aging techniques with AI as a proof of concept for potential proposals and uses in music videos.

[Feb 2023]

Test creating parallax in Midjourney v5 environment images using AI-generated depth maps, with Pixop Deep Restoration for upres.

Music created with AI using AIVA
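The parallax test above relies on a depth map to move near parts of a still image further than distant ones. The source doesn't say which software performed the displacement, so the following is only a minimal pure-Python sketch of one common 2.5D approach: a per-row forward warp with a z-buffer, plus naive hole filling where the foreground uncovers the background. It assumes depth values in 0..1 with 1.0 meaning nearest (some depth models output the inverse); the function name `parallax_shift` and the `max_shift` parameter are hypothetical.

```python
def parallax_shift(image, depth, max_shift):
    """Forward-warp each row horizontally according to a depth map.

    image: list of rows of pixel values.
    depth: same size, floats in 0..1 where 1.0 is nearest to camera
           (an assumed convention; some depth models output the inverse).
    max_shift: hypothetical parameter, the pixel shift for the nearest depth.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    out = [[None] * width for _ in range(height)]
    zbuf = [[-1.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            d = depth[y][x]
            nx = x + round(max_shift * d)  # nearer pixels move further
            # Keep whichever source pixel is nearest when several land here.
            if 0 <= nx < width and d > zbuf[y][nx]:
                zbuf[y][nx] = d
                out[y][nx] = image[y][x]
        # Fill disocclusion holes by smearing the last pixel from the left.
        last = None
        for x in range(width):
            if out[y][x] is None:
                out[y][x] = last
            else:
                last = out[y][x]
        # Holes at the start of the row: copy the first filled pixel.
        first = next((v for v in out[y] if v is not None), None)
        for x in range(width):
            if out[y][x] is not None:
                break
            out[y][x] = first
    return out


# Usage: a near object (depth 1.0) in front of a far background (depth 0.0).
frames = [parallax_shift([[1, 2, 3, 4]], [[0.0, 0.0, 1.0, 0.0]], s) for s in (0, 1)]
print(frames)
```

Animating `max_shift` over successive frames produces the camera-move illusion. Real tools add sub-pixel sampling and smarter inpainting of the uncovered areas, which is where most of the visible artifacts in this kind of effect come from.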

[Feb 2023]

Generating photorealistic CG on a smartphone using 3D assets and AI with the Simulon app (currently in beta). At the moment, the beta version of the iOS app only lets you generate full-res renders of a still frame using HDRIs, but I went a bit further and used EbSynth to reproject the render onto the AR geo already available in the app to add motion. The developers are working on some great new features, like point light controls, better tracking and other cool stuff, coming soon.

Music created using Mubert: music powered by AI